A Dose Of AI Reality

This week I had the pleasure of moderating a panel at the CSCMP EDGE 2023 conference in Orlando, and while there I had the chance to hear a keynote by Missy Cummings, PhD on the subject “AI Reality Check: Truth vs. Hype in the Stampede Towards AI”. Dr. Cummings is the director of Mason’s Autonomy and Robotics Center (MARC) and a professor at George Mason University. She recently advised the Biden administration on autonomous vehicle policies.

A topic like that was bound to draw my attention because the RSR team has been trying to tamp down all the wild-eyed enthusiasm about AI. While it’s a great new tool for analyzing data and creating important insights to help retailers respond more quickly to the dynamics of the marketplace, we also caution our advisory clients to not get too far out over their skis by thinking of it as something so magical that it can solve all the world’s problems.

So Dr. Cummings’ real talk about AI and GenAI was important to hear, especially at a conference where there was a lot of discussion about autonomous vehicles, specifically trucks. Ironically, on the very morning of Dr. Cummings’ address to the conference, the San Francisco Chronicle reported on a pedestrian accident in the city involving a Cruise driverless car. San Francisco has allowed driverless taxis operated by Cruise and Waymo since 2022, and to date, 241 accidents have been reported. While some are funny, others (like this latest one) are tragic, and they have called into question the wisdom of pushing autonomous vehicles onto the roadways. Nonetheless, the experiment is expanding to places like Houston and Austin.

Dr. Cummings is no fan of self-driving vehicles, and started off her presentation by saying, “I’ve got bad news. If you are betting the farm on self-driving trucks as part of your supply chain strategy, you’re in serious trouble. I’m not saying we’ll never get there, but we’re not anywhere close.”

We’ll get to that in a moment. But first, the professor outlined the differences between “rules-based AI”, “neural networks”, “Generative AI” (GenAI), and “Large Language Models” – all terms that get bandied about thoughtlessly in the consumer media world. According to the professor:

“Rules-based”, or “good old AI”, should be familiar to most businesspeople, because we use it every day: IF/THEN/ELSE rules or decision-tree logic, very frequently embedded in code.
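To make “rules-based” concrete, here is a minimal sketch of the kind of IF/THEN/ELSE logic retailers already run every day. The function name `reorder_decision`, its parameters, and the thresholds are hypothetical, chosen only for illustration; they are not a real replenishment policy.

```python
def reorder_decision(on_hand: int, daily_sales: float, lead_time_days: int,
                     safety_stock: int = 20) -> str:
    """Return a replenishment action using fixed, human-written rules.

    All names and thresholds here are illustrative assumptions.
    """
    # Expected demand while we wait for a new shipment to arrive.
    demand_during_lead_time = daily_sales * lead_time_days
    reorder_point = demand_during_lead_time + safety_stock

    # IF stock won't cover lead-time demand plus a safety buffer, THEN reorder now.
    if on_hand < reorder_point:
        return "reorder"
    # ELSE IF stock is more than three times the reorder point, flag it for review.
    elif on_hand > 3 * reorder_point:
        return "review for markdown"
    # ELSE leave things alone.
    else:
        return "no action"


print(reorder_decision(on_hand=50, daily_sales=10, lead_time_days=5))
```

Every outcome is traceable to a rule a person wrote, which makes this kind of AI predictable; the flip side is that it has no answer for a situation nobody wrote a rule for.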

“Neural networks” are AI engines that can sift through large, often unstructured datasets to find statistical relationships in the data. This kind of analysis can yield important a-ha! revelations, e.g., that when two things happen, there’s a high likelihood that another thing will happen too.
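The “when two things happen, a third is likely” relationship can be illustrated with plain counting. This sketch is not a neural network; it computes the “confidence” measure used in association-rule mining, which is the kind of pattern a neural network learns implicitly from far larger data. The shopping baskets and the function name `conditional_likelihood` are hypothetical.

```python
def conditional_likelihood(baskets: list[set[str]], given: set[str], target: str) -> float:
    """Estimate P(target in basket | every item in `given` is in the basket).

    Simple co-occurrence counting (association-rule "confidence"),
    used here only to illustrate the idea; not a neural network.
    """
    # Keep only the baskets that contain all of the "given" items.
    matching = [b for b in baskets if given <= b]
    if not matching:
        return 0.0
    # Share of those baskets that also contain the target item.
    hits = sum(1 for b in matching if target in b)
    return hits / len(matching)


# Hypothetical transaction data.
baskets = [
    {"chips", "salsa", "soda"},
    {"chips", "salsa", "soda"},
    {"chips", "salsa"},
    {"chips", "soda"},
    {"bread", "milk"},
    {"salsa", "bread"},
]

print(conditional_likelihood(baskets, {"chips", "salsa"}, "soda"))  # 2 of 3 baskets
print(conditional_likelihood(baskets, set(), "soda"))               # baseline: 3 of 6
```

Soda shows up in half of all baskets, but in two of every three baskets that already contain chips and salsa. That lift over the baseline is the sort of relationship the professor described.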

GenAI is based on “Large Language Models”, and Dr. Cummings claimed, “that’s not AI at all, because it cannot come up with something it has not seen before”. Large Language Models are presented with content that is tagged in such a way that the model can be trained on it. There are stories in the media lately about crowded tech shops in Africa and Asia, full of people tagging content of all kinds to be consumed by Large Language Models. But Dr. Cummings pointed out that there’s an inherent risk of model degradation, since GenAI uses those models to generate new content, which in turn gets tagged and used again to retrain the models (creating a kind of “unvirtuous” cycle of poorer and poorer information).

But again, GenAI and the underlying Large Language Models can’t come up with anything genuinely new; they can only work with what they have “seen”.
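The degradation cycle Dr. Cummings described can be shown with a deliberately simplified toy. It assumes a “model” that is nothing more than a mean and a spread, and a generator that favors its most typical outputs (it only produces values within one standard deviation of the mean). Each generation is retrained on the previous generation’s output. The function `retrain_on_own_output` and the sample data are hypothetical; this is not how any real Large Language Model works.

```python
import statistics


def retrain_on_own_output(data: list[float], generations: int, samples: int = 11) -> list[float]:
    """Toy model-degradation loop (an illustrative assumption, not a real LLM).

    Each generation "trains" by fitting a mean and standard deviation, then
    "generates" evenly spaced outputs within one standard deviation of the
    mean. Those outputs become the next generation's training data.
    Returns the spread (population standard deviation) at every generation.
    """
    spreads = [statistics.pstdev(data)]
    step = 2 / (samples - 1)  # spacing of outputs across [-1, +1] std devs
    for _ in range(generations):
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        # The generator only produces "typical" content near the mean.
        data = [mu + sigma * (-1 + i * step) for i in range(samples)]
        spreads.append(statistics.pstdev(data))
    return spreads


original = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
for gen, spread in enumerate(retrain_on_own_output(original, generations=4)):
    print(f"generation {gen}: spread {spread:.2f}")
```

With each pass, the variety in the data shrinks; the unusual cases in the tails are the first to disappear, which is exactly the material a model would need to handle the unexpected.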

Here’s how that affects driverless vehicles. Cummings told the story of how an autonomous vehicle in Charlestown, Massachusetts, recently stopped dead in its tracks rather than maneuver around a delivery truck that was in an intersection (if you’ve ever driven in Charlestown, you know how treacherous conditions can be anyway!). Here’s what was happening:

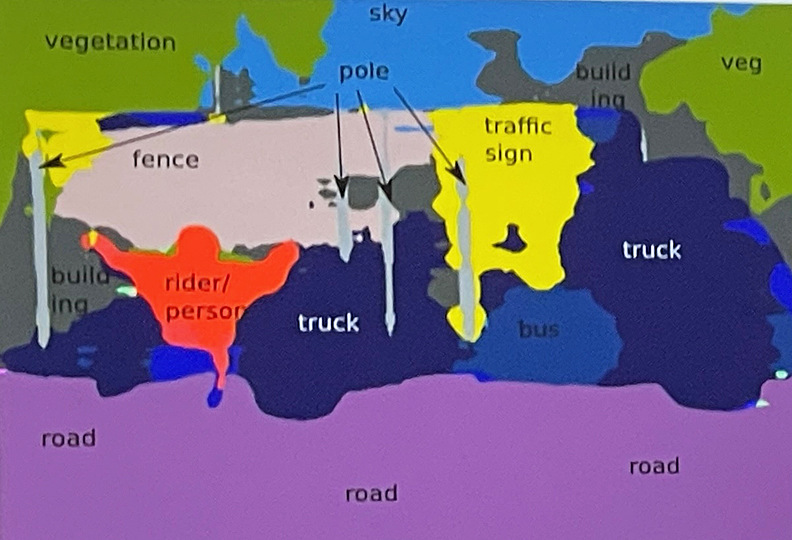

But this is what the “brain” driving the autonomous vehicle saw:

The vehicle didn’t know what to do, so it simply stopped and blocked the street. The model hadn’t been trained on a truck with a picture of a big guy on its side, so it got confused. Clearly, it was NOT ready for prime time, according to Dr. Cummings.

What To Do?

Dr. Cummings shared a graphical depiction of when GenAI makes sense and when it doesn’t, a bottom-up vs. top-down comparison. According to the professor, tasks where the required skills and rules are well defined and “uncertainty” is low are excellent candidates for GenAI (for example, robots on an assembly line). For tasks where knowledge and expertise are vital and uncertainty is high, GenAI is not ready for prime time (for example, maneuvering through difficult traffic).

Although Dr. Cummings would not commit to saying that driverless trucks will “never” work, she opined that they “are not anywhere close”. She added, “maybe not in my lifetime!”

The professor provided the audience with a set of guidelines for companies that want to pursue the idea of using GenAI to automate decision making:

- Follow the Money. We’re in a hype cycle right now – pay attention to who has the most to gain from all the hype surrounding this new technology.

- Watch out for “regression to the mean”, especially in safety-critical systems; it can lead to “inappropriate code” that in turn could lead to disaster.

- Cyber-security must be a top priority; misinformation corrupts models.

- An AI workforce must be developed to manage risks and maintain AI models. This is a process – not a project.

Just like all the revolutionary technologies that have come along over the years, AI shows tremendous promise, and it creates a lot of risks. Technologists are pushing the boundaries of what it can do before its risks are understood well enough to establish guardrails. But for retailers, practicality is key: there’s a wealth of new data available that can help retailers become much more responsive and efficient than ever before, well before we need to start talking about sentient machines.

So RSR’s advice? It’s very close to what Dr. Cummings had to say: every technology applied to business processes follows a predictable path to maturity, from “no capability”, to “inconsistent” and “inefficient”, and then on to “competent”, “performing” … and finally to “differentiating”. Take your time, don’t skip steps, and get it right.